Lately, the common theme in emerging identity security technology has been… well, you know. AI. It’s all anyone wants to talk about. All of us in the IAM business have been scrambling to find a way to tell our customers and the market that yes, we have AI! We’ve had it all along! If that were so obviously true, why would we need to tell you about it now?

As an identity security technologist, I’ve experienced the growing pains of AI in IAM.

Today I read a very interesting essay by a science fiction author that distilled one insight about AI that is highly relevant to identity security: AI is best used for tasks that are easy but time-consuming, because it can do easy things very fast. This matches what I hear from our customers about AI. They don’t need AI to solve hard problems that people with actual “official” intelligence can’t solve. They want AI to do the tedious grunt work, freeing their critical IT staff for the hard work that AI is unsuited for.

If an AI has access to data, it can generally be relied upon to report accurately on the content of that data, often as a summary, provided you tell it what to look for. What it can’t do nearly as well as people is decide what to look for, or interpret the results and turn them into an actionable plan.

Your next question should be, “does your product do that?” The answer is, “yes, but.”

One example of the “yes” in Identity Manager concerns role-based access control (RBAC), the hot topic in AI over the past year: the mythical “easy button.” Of course, once you scratch the surface, it turns out that what AI can do for RBAC unassisted is not exactly what you want. If we dig into the reason RBAC exists in the first place, it might be summarized like this: automate access assignment, giving every user the minimum access they need to do their job, while enforcing the security policies that maintain an environment of least privilege. That sounds very time-consuming, so AI is a natural fit, right? Maybe not.

At the same time that we ask for an easy button for roles, we also recognize that we have a problem with access visibility: users may currently hold oversubscribed or inappropriate access. In other words, the data the AI works from may have a high error rate. The AI will build RBAC policies from what is currently assigned, without regard for whether that access is appropriate. Now you may have automated the replication of the very mess you started with. You will not only have created risk, but also queued up a lot of work that AI is poorly suited to do: cleaning up these automatically created roles.
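To make the replication problem concrete, here is a minimal sketch of naive role mining, assuming a hypothetical data set and a simple rule (a department’s role is every entitlement held by at least half its members). It is not how Identity Manager mines roles; it only shows how an inappropriate grant in the current state becomes part of a role definition. Assume only `carol` legitimately needs `PayrollAdmin` and `bob`’s copy is a leftover from an old job.

```python
from collections import Counter

# Hypothetical current-state assignments: user -> (department, entitlements).
# Assumption: only carol should hold PayrollAdmin; bob's grant is a leftover.
ASSIGNMENTS = {
    "alice": ("Finance", {"ERP-Read", "Reports"}),
    "bob": ("Finance", {"ERP-Read", "Reports", "PayrollAdmin"}),
    "carol": ("Finance", {"ERP-Read", "PayrollAdmin"}),
    "dave": ("Finance", {"ERP-Read", "Reports"}),
}


def mine_department_roles(assignments, threshold=0.5):
    """Naive role mining: a department's role contains every entitlement held
    by at least `threshold` of its members. Nothing here checks whether the
    access is appropriate, so existing mistakes become role definitions."""
    members = {}
    for dept, ents in assignments.values():
        members.setdefault(dept, []).append(ents)
    roles = {}
    for dept, ent_sets in members.items():
        counts = Counter(e for ents in ent_sets for e in ents)
        roles[dept] = {e for e, n in counts.items() if n / len(ent_sets) >= threshold}
    return roles


roles = mine_department_roles(ASSIGNMENTS)
print(sorted(roles["Finance"]))

# Entitlements the mined role would newly grant to each member.
for user, (dept, ents) in ASSIGNMENTS.items():
    print(user, sorted(roles[dept] - ents))
```

Because two of four Finance users hold `PayrollAdmin`, it clears the threshold, and automated assignment of the mined role would hand it to `alice` and `dave` as well. Someone now has to find and undo that, which is exactly the cleanup work described above.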

Identity Manager does use AI to assist in role management, but it does so by doing what AI does best. It analyzes the data and provides contextual information to the people tasked with creating and maintaining roles, but leaves the judgment to the real human beings who are responsible and accountable for their choices.

Identity Manager uses machine learning, a branch of AI, to perform peer group analysis and make recommendations, not only for role management but also for which requestable items a user should request and for decisions in access reviews and attestations.
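Peer group analysis can be illustrated with a deliberately simple, frequency-based sketch. It is not Identity Manager’s actual model: the peer-group key (department, title, manager), the sample users, and the `request_min`/`review_max` thresholds are all hypothetical. Items most peers hold but the user lacks become request suggestions; items the user holds but few peers share become review suggestions for a human to decide on.

```python
from collections import Counter, defaultdict

# Hypothetical identity records: user -> ((department, title, manager), entitlements).
# The (department, title, manager) tuple defines the peer group.
USERS = {
    "u1": (("Sales", "Rep", "mgr1"), {"CRM", "Email", "Quotes"}),
    "u2": (("Sales", "Rep", "mgr1"), {"CRM", "Email", "Quotes"}),
    "u3": (("Sales", "Rep", "mgr1"), {"CRM", "Email", "Quotes", "DB-Admin"}),
    "u4": (("Sales", "Rep", "mgr1"), {"CRM", "Email"}),
}


def peer_stats(users):
    """Map each peer group to (group size, Counter of entitlements held)."""
    groups = defaultdict(list)
    for key, ents in users.values():
        groups[key].append(ents)
    return {k: (len(v), Counter(e for ents in v for e in ents)) for k, v in groups.items()}


def recommend(user, users, request_min=0.6, review_max=0.3):
    """Return (items to suggest requesting, items to flag for review).

    These are hints for a person to act on, not automatic grants or
    revocations. The user is counted as part of their own peer group."""
    key, ents = users[user]
    size, counts = peer_stats(users)[key]
    to_request = sorted(e for e, n in counts.items() if e not in ents and n / size >= request_min)
    to_review = sorted(e for e in ents if counts[e] / size <= review_max)
    return to_request, to_review


print(recommend("u4", USERS))
print(recommend("u3", USERS))
```

Here `u4` would be prompted to request `Quotes` (held by three of four peers), while `u3`’s `DB-Admin` (held by one of four) would surface in an access review. The thresholds only decide what gets shown; the decision stays with the reviewer.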

That was the ‘yes.’ Here’s the ‘but.’

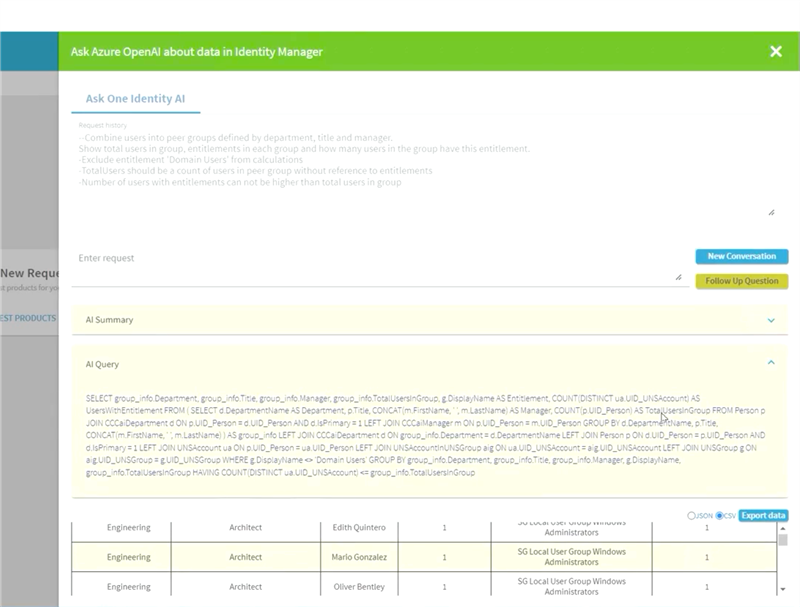

Your data may be hiding an entire category of useful information, and AI can help uncover it, in this case using the latest hot AI tech: generative AI. At our last Unite customer conference, some of our pre-sales architects showed an integration between Identity Manager and Azure OpenAI. Inside Identity Manager’s web portal, users can ask natural-language questions to pull data from Identity Manager’s entire identity data catalog. The response is displayed in the UI, along with a query that can be reused in Identity Manager later, for example to create a role, policy, or report.

In this example, the user held a conversation with the AI to build and refine the query. The tabular results are shown alongside a SQL query that would be very difficult for a human to write by hand. The AI is very good at laborious technical tasks like writing SQL queries, but it needs to be told what to look for, often iteratively. The user began with one question and refined it after each response, in this sequence:

- Show the top 10 users with the riskiest entitlements. Include Department.

- Combine users into peer groups defined by department, title and manager. Show total users in group, entitlements in each group, and how many users in the group have this entitlement.

- Exclude entitlement ‘Domain Users’ from calculations.

- TotalUsers should be a count of users in peer group without reference to entitlements.

- Number of users with entitlements cannot be higher than total users in group.
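The last three refinements pin down counting rules that are easy to get wrong. As a rough sketch of the peer-group part of such a query (not the query the AI actually generated, and not Identity Manager’s data model), here is a version run against an in-memory SQLite database with a hypothetical two-table schema, `users` and `user_entitlements`. Risk scoring from the first prompt is omitted because it depends on how risk is modeled. `TotalUsers` is computed from `users` alone, and `COUNT(DISTINCT ...)` keeps duplicate grant rows from pushing the per-entitlement count above the group size. The join assumes `manager` is never NULL.

```python
import sqlite3

# Hypothetical schema; a real identity data catalog will look different.
SCHEMA = """
CREATE TABLE users (user_id TEXT PRIMARY KEY, department TEXT, title TEXT, manager TEXT);
CREATE TABLE user_entitlements (user_id TEXT, entitlement TEXT);
"""

PEER_GROUP_QUERY = """
WITH peer AS (
    -- TotalUsers counts everyone in the peer group, with or without entitlements.
    SELECT department, title, manager, COUNT(*) AS TotalUsers
    FROM users
    GROUP BY department, title, manager
)
SELECT u.department, u.title, u.manager, p.TotalUsers, e.entitlement,
       -- DISTINCT keeps this count from exceeding TotalUsers.
       COUNT(DISTINCT u.user_id) AS UsersWithEntitlement
FROM users u
JOIN user_entitlements e ON e.user_id = u.user_id
JOIN peer p ON p.department = u.department AND p.title = u.title AND p.manager = u.manager
WHERE e.entitlement <> 'Domain Users'
GROUP BY u.department, u.title, u.manager, p.TotalUsers, e.entitlement
ORDER BY u.department, u.title, u.manager, e.entitlement
"""


def peer_group_report(conn):
    return conn.execute(PEER_GROUP_QUERY).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO users VALUES (?, ?, ?, ?)", [
    ("u1", "IT", "Engineer", "m1"),
    ("u2", "IT", "Engineer", "m1"),
    ("u3", "IT", "Engineer", "m1"),  # no entitlements, but still counts toward TotalUsers
])
conn.executemany("INSERT INTO user_entitlements VALUES (?, ?)", [
    ("u1", "Domain Users"), ("u2", "Domain Users"),  # excluded by the WHERE clause
    ("u1", "VPN"), ("u1", "VPN"),  # duplicate grant, e.g. direct and via a group
    ("u2", "VPN"),
    ("u1", "Prod-DB"),
])
for row in peer_group_report(conn):
    print(row)
```

With this sample data the group has three users, `VPN` is held by two of them despite the duplicate row, and `Domain Users` does not appear at all.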

The AI responded with the following table and SQL query, which could be used to create a policy, report, or attestation:

This example uses Identity Manager’s web portal customization capability to integrate with a third-party AI, greatly enhancing the utility and effectiveness of your identity governance and administration (IGA) program. Imagine what other questions you could ask the AI to unlock insights and uncover risks in your data.

You don’t have to wait for our engineering team to build this capability for you. Identity Manager can apply these innovations in the field today.

In this world of AI-assisted everything, the sky’s the limit for what you can do. AI is a potential force multiplier in your IGA program, enabling you to focus on what’s most important.